Claude Code Agents Double Session Length and Auto‑Approve Rate as Autonomy Grows

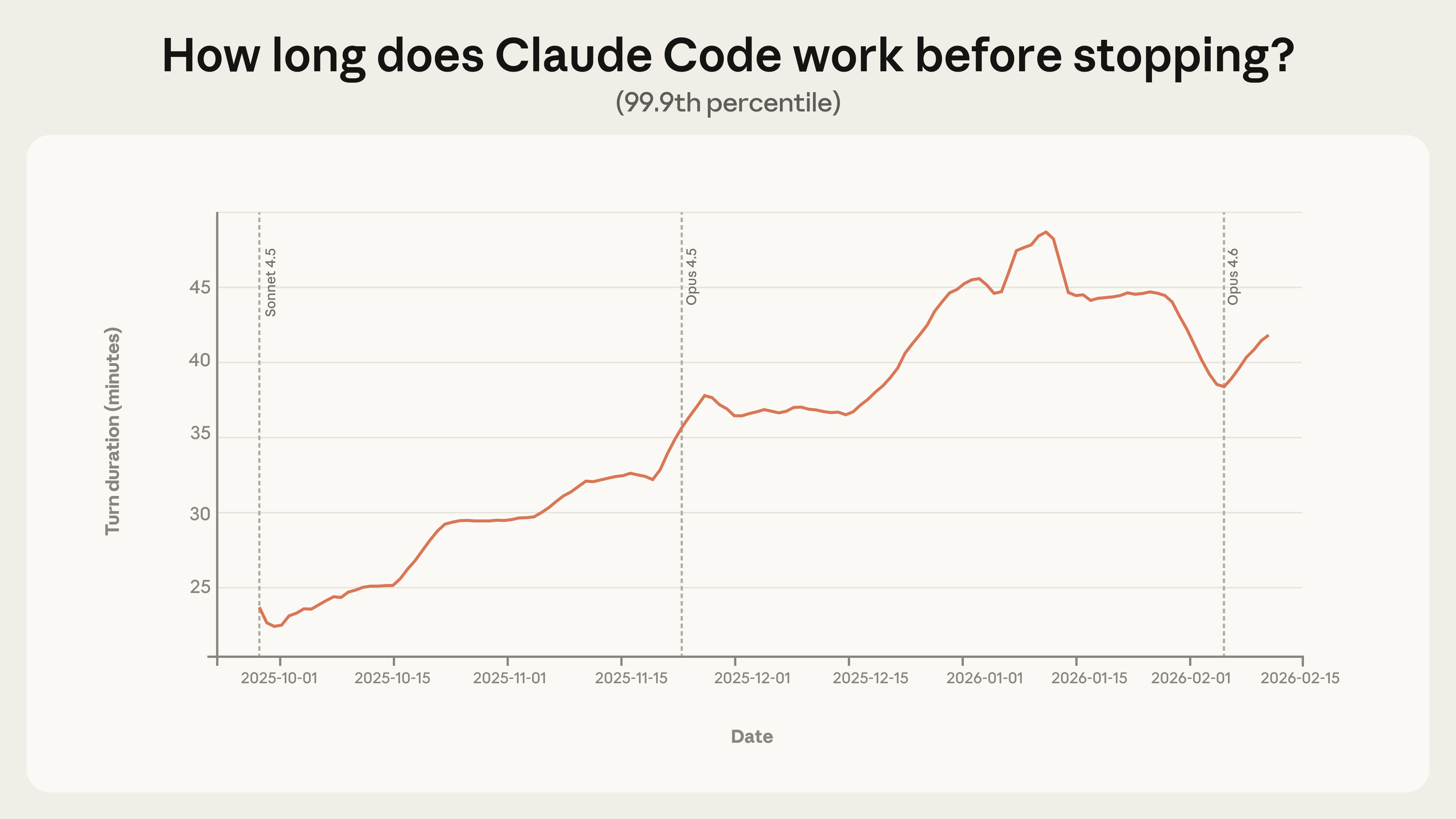

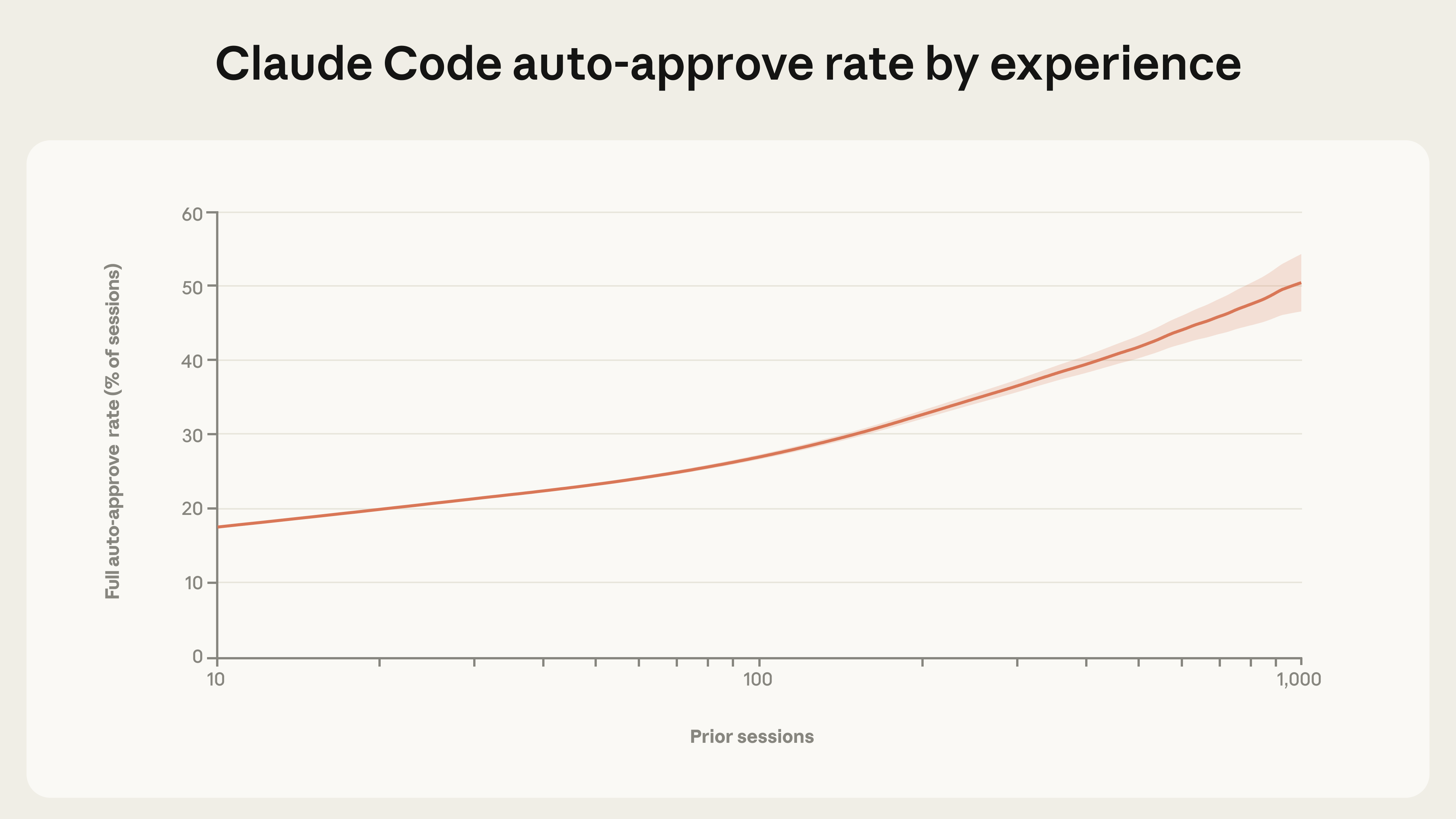

Session Length and User Trust Expand Rapidly

The 99.9th‑percentile turn duration rose from under 25 minutes in late September 2025 to over 45 minutes by early January 2026, indicating that users allow longer autonomous runs [1]. New users (<50 sessions) employ full auto‑approve in roughly 20 % of sessions, while veterans (750+ sessions) do so in more than 40 %, reflecting growing confidence in the agent’s decisions [1]. Because the increase is smooth across model releases, it likely stems from user behavior rather than purely from technical improvements [1].
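To make the tail statistic concrete, the sketch below shows one common way to compute a high percentile such as the 99.9th (the nearest-rank method). It is only an illustration: the function name `percentile_nearest_rank`, the per-mille parameter, and the synthetic durations are assumptions, and the source does not say which percentile method was actually used.

```python
def percentile_nearest_rank(values, per_mille):
    """Return the nearest-rank percentile of `values`.

    `per_mille` is the percentile in tenths of a percent (999 means the
    99.9th percentile); integers avoid floating-point rounding in the rank.
    """
    if not values:
        raise ValueError("values must be non-empty")
    if not 0 < per_mille <= 1000:
        raise ValueError("per_mille must be in 1..1000")
    ordered = sorted(values)
    # Ceiling division: the smallest rank that covers per_mille/1000 of the data.
    rank = -(-per_mille * len(ordered) // 1000)
    return ordered[rank - 1]


# Synthetic turn durations in minutes (hypothetical, not data from the report):
# 9,980 short turns and 20 long autonomous runs.
durations = [1.0] * 9980 + [50.0] * 20

median = percentile_nearest_rank(durations, 500)
tail = percentile_nearest_rank(durations, 999)
print(f"median={median} min, p99.9={tail} min")
```

With this made-up data the median stays at 1 minute while the 99.9th percentile is 50 minutes, which is why a tail percentile can move sharply even when typical turns remain short.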

Interrupt Patterns Shift With Experience

Turn‑level interruption rates climb from about 5 % for novices (≈10 sessions) to around 9 % for seasoned users, showing a move from approving each action toward monitoring and stepping in when needed [1]. On the most complex goals, Claude Code pauses to ask for clarification more than twice as often as humans interrupt it, demonstrating self‑regulation under uncertainty [1]. The higher interruption rate among experienced users aligns with deeper engagement and finer‑grained oversight [1].

Software Engineering Dominates Tool Calls While Risks Remain Low

Nearly 50 % of public‑API tool calls involve software‑engineering tasks, making this the dominant agentic use case [1]. Safeguards appear in 80 % of calls, 73 % retain a human in the loop, and only 0.8 % are irreversible, indicating that most deployments are low‑risk [1]. These risk‑mitigation figures accompany the growing autonomy, suggesting that responsible deployment practices persist [1].

Internal Testing Shows Doubling of Success on Hard Tasks

Between August and December 2025, internal users’ success rate on the hardest tasks doubled, while average human interventions per session fell from 5.4 to 3.3 [1]. The reduction in interventions correlates with the model’s improved ability to handle complex problems autonomously [1]. These internal metrics reinforce the external trends of increased trust and efficiency [1].
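As a quick check on the size of that drop, the relative change in interventions per session can be worked out directly from the two figures quoted above. The helper name `relative_change` is an illustrative assumption, not something taken from the source.

```python
def relative_change(before, after):
    """Return the fractional change from `before` to `after`."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before


# Average human interventions per session, as quoted in the text.
change = relative_change(5.4, 3.3)
print(f"{change:.1%}")  # -38.9%
```

In other words, interventions per session fell by roughly 39 % over the period.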

Timeline

Apr 28, 2025 – Anthropic analyzes 500,000 Claude interactions and finds Claude Code automates 79 % of tasks versus 49 % for Claude.ai, with front‑end development (JavaScript/TypeScript 31 %, HTML/CSS 28 %) dominating and startups accounting for 32.9 % of Claude Code chats, indicating a strong early‑stage bias toward lightweight, entrepreneurial coding work[5].

Aug – Dec 2025 – Internal Claude Code users double their success rate on the hardest coding problems while human interventions per session fall from 5.4 to 3.3, suggesting that greater model autonomy goes hand in hand with better outcomes for power users[1].

Late Sep 2025 – The 99.9th‑percentile Claude Code turn duration sits under 25 minutes, marking the baseline before a rapid rise in user‑granted autonomy across subsequent model releases[1].

Nov 13‑20 2025 – Anthropic collects 1 million Claude.ai conversations and 1 million first‑party API transcripts to compute five new “economic primitives” (task complexity, skill level, purpose, AI autonomy, success) for its Economic Index, establishing a granular framework for measuring AI impact[3].

Dec 2 2025 – A survey of 132 engineers reports that Claude now handles roughly 60 % of daily work, boosting self‑reported productivity by 50 %; engineers claim they feel “full‑stack,” tackling front‑end, database, and API tasks they previously avoided, while also noting reduced mentor interactions and anxiety about skill erosion[4].

Early Jan 2026 – The 99.9th‑percentile Claude Code turn duration exceeds 45 minutes, reflecting users’ willingness to let the agent run longer, more autonomous workflows without constant oversight[1].

Jan 15 2026 – Anthropic releases its fourth Economic Index, introducing five economic primitives. It shows complex, college‑level tasks speeding up by as much as 12‑fold while success drops to 66 %, AI coverage climbing to 49 % of sampled jobs, and revised productivity gains falling to about 1 percentage point per year. The report also launches a Rwanda‑ALX partnership piloting Claude Pro for recent graduates transitioning from coursework to broader use[2][3].

Jan 2026 – The report notes the U.S. AI Usage Gini coefficient falls from 0.37 to 0.32, projecting that state‑level parity could be reached within 2‑5 years under a proportional‑convergence model, highlighting a rapid diffusion of AI tools across the American economy[3].

Feb 18 2026 – Claude Code demonstrates growing autonomy: veteran users (750+ sessions) use full auto‑approve in over 40 % of sessions, the interruption rate rises to ~9 % as users shift from approving each step to monitoring, and on complex goals the model pauses for clarification more than twice as often as humans interrupt it. Meanwhile, 80 % of tool calls retain safeguards and only 0.8 % are irreversible, pointing to a low‑risk, software‑engineering‑focused deployment landscape[1].
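The Jan 2026 timeline entry cites a Gini coefficient for AI usage across U.S. states. For readers unfamiliar with the measure, here is a minimal sketch of the standard Gini formula over sorted values, where 0 means perfectly even usage and values near 1 mean usage concentrated in a few places. The function name `gini` and the sample inputs are hypothetical, and the report's exact inputs and weighting are not described in this summary.

```python
def gini(values):
    """Return the Gini coefficient of a list of non-negative values."""
    if not values:
        raise ValueError("values must be non-empty")
    if any(v < 0 for v in values):
        raise ValueError("values must be non-negative")
    ordered = sorted(values)
    n = len(ordered)
    total = sum(ordered)
    if total == 0:
        return 0.0  # Everyone has zero, so the distribution is trivially even.
    # Rank-weighted sum over the ascending values (ranks start at 1).
    weighted = sum(rank * v for rank, v in enumerate(ordered, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


# Hypothetical per-region usage figures (not data from the report).
print(gini([1, 1, 1, 1]))  # 0.0
print(gini([0, 0, 0, 1]))  # 0.75
```

A fall from 0.37 to 0.32, as reported, therefore indicates usage becoming more evenly spread across states.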

All related articles (5 articles)

- Anthropic: Claude Code agents show growing autonomy and shifting user oversight

- Anthropic: Anthropic’s Economic Index Shows AI Boosts Complex Tasks but Deepens Occupational Gaps

- Anthropic: Anthropic Economic Index January 2026: New Primitives Reveal AI’s Uneven Impact

- Anthropic: AI‑driven productivity surge and growing pains at Anthropic

- Anthropic: AI Coding Assistants Shift Toward Automation, Favor Startups
