Anthropic Interviewer Captures Professionals’ Views on AI Across 1,250 Interviews

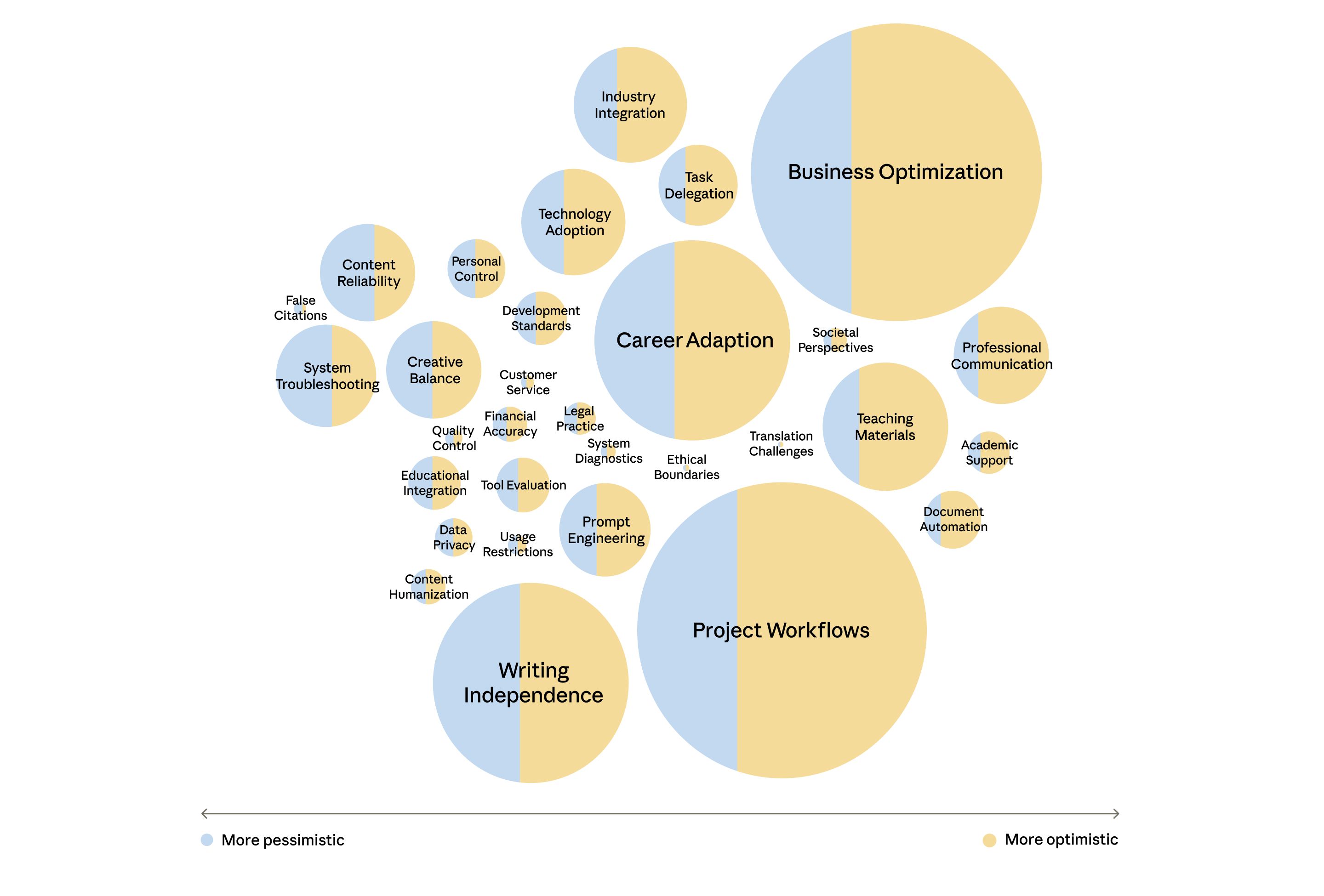

Anthropic rolls out Interviewer tool and opens data. Anthropic introduced Anthropic Interviewer, an AI‑driven system that conducts automated 10–15‑minute interviews on Claude.ai, and publicly released the full transcript dataset on Hugging Face for research use [1][5].

1,250 professionals surveyed across three cohorts. The pilot included 1,000 workers from a broad mix of occupations, plus 125 creatives (writers, visual artists, and others) and 125 scientists (physicists, chemists, data scientists, and others, spanning 50+ fields), all recruited via crowd‑worker platforms [1].

General workforce reports productivity gains but feels stigma. 86% say AI saves them time and 65% are satisfied with its role in their work, yet 69% mention workplace stigma around using AI and 55% express anxiety; 48% envision future jobs focused on overseeing AI systems [1].

Creative professionals see speed and quality boosts amid economic worries. 97% report time savings and 68% report quality improvements, yet 70% grapple with peers’ judgment and fear of displacement, and many worry they will have to sell AI‑generated content to stay afloat [1].

Scientists cite trust gaps yet want a deeper AI partnership. 79% flag trust and reliability as barriers and 27% mention technical limitations, but 91% want more AI assistance, especially for hypothesis generation; for now, their use remains limited to writing, coding, and literature review [1].

Interview tool proves scalable, but the study has biases. Anthropic Interviewer completed the large‑scale test at far lower cost than manual interviewing, yet the sample’s crowd‑worker recruitment, reliance on self‑report, and snapshot design limit generalizability and may overstate positive attitudes [1].

- Fact‑checker (general workforce): “A colleague recently said they hate AI and I just said nothing. I don’t tell anyone my process because I know how a lot of people feel about AI.” [1]

- Data‑quality manager (general workforce): “I try to think about it like studying a foreign language—just using a translator app isn’t going to teach you anything, but having a tutor who can answer questions and customize for your needs is really going to help.” [1]

- Pastor (general workforce): “…if I use AI and up my skills with it, it can save me so much time on the admin side which will free me up to be with the people.” [1]

- Novelist (creative): “I feel like I can write faster because the research isn’t as daunting.” [1]

- Information‑security researcher (scientist): “If I have to double check and confirm every single detail the [AI] agent is giving me to make sure there are no mistakes, that kind of defeats the purpose of having the agent do this work in the first place.” [1]

- Microbiologist (scientist): “I worked with one bacterial strain where you had to initiate various steps when the cells reached specific colors…the differences in color have to be seen to be understood and [instructions] are seldom written down anywhere.” [1]

Links

- [1] https://www.anthropic.com/research/anthropic-interviewer

- [2] http://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d/interviewer

- [3] https://www.anthropic.com/research/clio

- [4] https://www.anthropic.com/economic-index

- [5] https://huggingface.co/datasets/Anthropic/AnthropicInterviewer

- [6] https://www.anthropic.com/research/clio

- [7] http://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d

- [8] https://www.anthropic.com/research/clio

- [9] https://arxiv.org/pdf/2503.04761

- [10] https://www.anthropic.com/research/economic-index-geography

- [11] https://www.anthropic.com/news/claude-for-life-sciences

- [12] https://www.anthropic.com/news/introducing-the-anthropic-economic-advisory-council

- [13] https://www.anthropic.com/news/anthropic-higher-education-initiatives

- [14] https://www.anthropic.com/research/collective-constitutional-ai-aligning-a-language-model-with-public-input

- [15] https://www.las-art.foundation/programme/pierre-huyghe

- [16] https://www.mori.art.museum/jp/index.html

- [17] https://www.tate.org.uk/whats-on/tate-modern/electric-dreams

- [18] https://rhizome.org/events/rhizome-presents-vibe-shift/

- [19] https://www.socratica.info/

- [20] https://www.anthropic.com/news/model-context-protocol

- [21] https://www.anthropic.com/news/ai-for-science-program

- [22] https://www.aft.org/press-release/aft-launch-national-academy-ai-instruction-microsoft-openai-anthropic-and-united

- [23] http://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d/interviewer

- [24] https://www.sciencedirect.com/science/article/pii/S277250302300021X

- [25] https://www.sciencedirect.com/science/article/pii/S2451958824002021

- [26] http://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d

- [27] http://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d

- [28] https://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d/interviewer

- [29] https://www.anthropic.com/legal/privacy

- [30] https://privacy.claude.com/en/articles/12996960-how-does-anthropic-interviewer-collect-and-use-my-data

- [31] http://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d

- [32] http://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d

- [33] https://links.email.claude.com/s/c/cGkT1SXSNgcdo1m1Jg5ubLKEl9K6sdfmpEYqg1hKZUyGgcl4dwPfeO18GyKSSqXjh6VJYoL1jUZK6AD2BWJz4p6OxoEug7KO9W2Yl7UuTqBou7dHkuDUR9lSrGIwoWBHBu-wkEktomJDbUAT2u8a6E6foGoE2H3RIurvO9epWQ7AJsKe_0FNVxLk4ygbQL3j7y1jjTEiC2qM5p0y1YCjCVAFED8I6wYqldfi9BKBYq993W-nOs45JbAMQkT6sZUFTHhEwJTV__gENvsItJqLkEOWFL9lrz48UJTC33i6n3SqYgI6stnpAsjLF66P_qtdOX888L-0h2qzKpxlrtGvrvuqEtl5pLNkPQUulHsITd7MyVL3tf-Ubi3dJVc1h9rB7v04z5j16n5-Kbp-iwCpz3RIbNot86fAZNPdfMqyBxFRDZlAUH-iEQ7h8wY1x6k8mnegW7y77GhLsqTJiZxqIi9o511wHl1Ltu-XLpeOVd80/ql5MQfX8py3XUgI4Usb8PWvj6gyGGxly/16