Anthropic’s Constitutional Classifiers Show Mixed Success Against Universal Jailbreaks

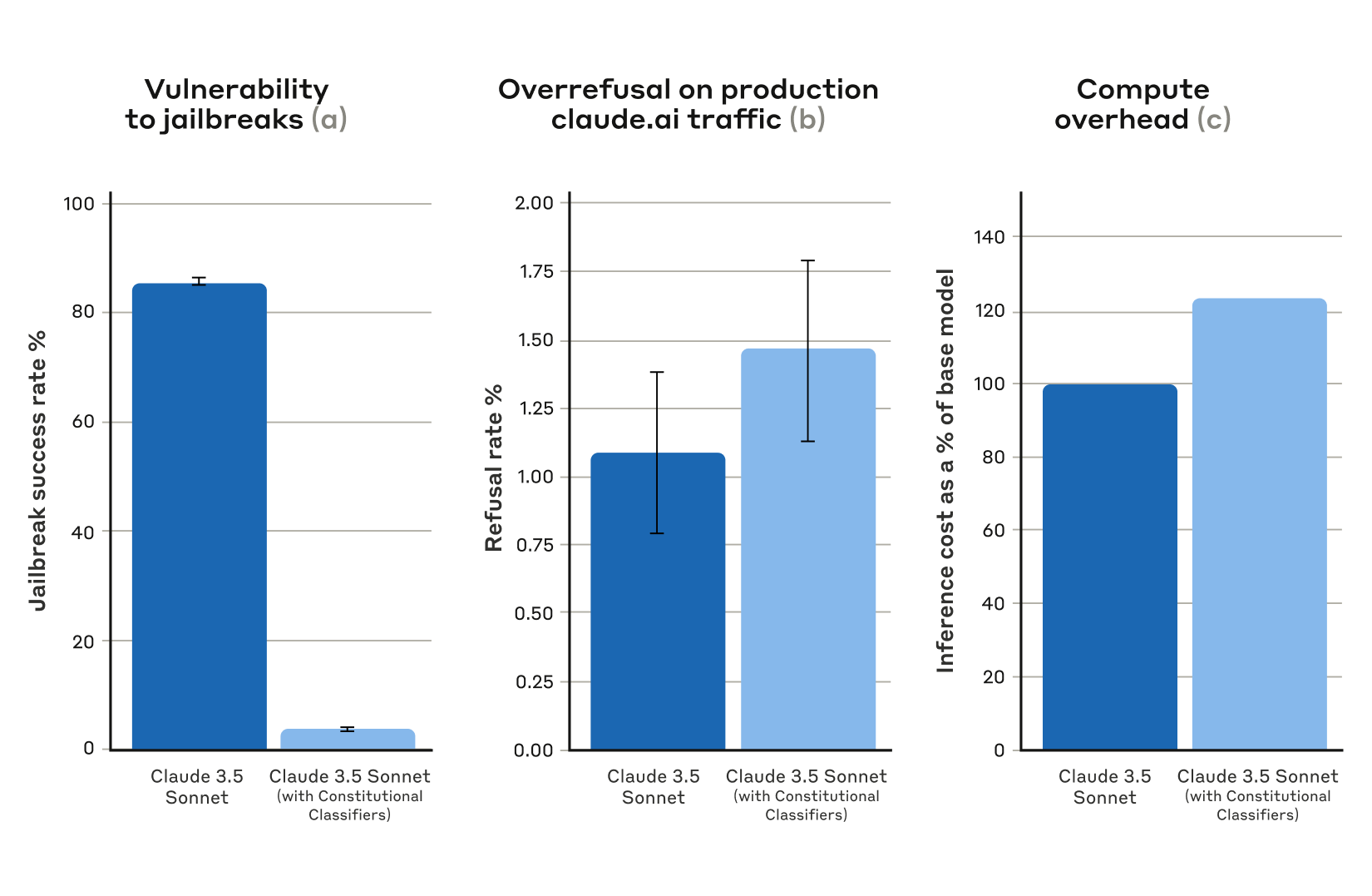

New paper proposes Constitutional Classifiers to block jailbreaks, but the initial prototype incurred high over‑refusal and compute costs [2] The Safeguards Research Team released a method that trains input and output classifiers on synthetic data, with the goal of filtering most jailbreak attempts while keeping over‑refusal low; the initial prototype, however, refused many harmless queries and required substantially more compute to run.
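The input/output arrangement described above can be pictured with a minimal sketch. Everything here is hypothetical and for illustration only: `guarded_generate`, the `REFUSAL` message, and the keyword-based toy checks are stand-ins, not Anthropic's trained classifiers or any published API.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that."  # hypothetical refusal message


def guarded_generate(
    prompt: str,
    model: Callable[[str], str],
    input_flagged: Callable[[str], bool],
    output_flagged: Callable[[str], bool],
) -> str:
    """Call the model only if the prompt passes the input check, and
    return its answer only if the answer passes the output check."""
    if input_flagged(prompt):
        return REFUSAL
    answer = model(prompt)
    if output_flagged(answer):
        return REFUSAL
    return answer


# Toy stand-ins: simple keyword checks, NOT trained classifiers.
def toy_model(prompt: str) -> str:
    return f"Echo: {prompt}"


def toy_input_flagged(text: str) -> bool:
    return "forbidden" in text.lower()


def toy_output_flagged(text: str) -> bool:
    return "secret" in text.lower()


if __name__ == "__main__":
    for p in ["hello", "a FORBIDDEN request", "tell me a secret"]:
        print(p, "->", guarded_generate(p, toy_model, toy_input_flagged, toy_output_flagged))
```

The sketch also suggests where the two reported costs would come from: each request runs up to two extra checks (compute), and any false positive from either check turns a harmless query into a refusal (over‑refusal).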

Human red‑team bug bounty with 183 participants (>3,000 hours) found no universal jailbreak [1] Independent jailbreakers were given ten forbidden queries and asked to get Claude 3.5 Sonnet (June 2024), guarded by the prototype classifiers, to answer all of them; despite extensive effort, no participant found a single jailbreak that answered every query.

Automated evaluation on 10,000 synthetic jailbreak prompts cut jailbreak success from 86% to 4.4% with a minimal increase in over‑refusal [1] With Claude 3.5 Sonnet (Oct 2024) guarded by the classifiers, only 4.4% of attacks succeeded, compared with 86% against the unguarded model; refusals of harmless queries rose by an absolute 0.38% (not statistically significant), and compute cost rose by 23.7%.
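To put the reported rates in perspective, the short calculation below converts them into counts and a relative reduction. It assumes, for illustration, that both success rates apply to the same 10,000 prompts; the variable names are hypothetical.

```python
# Figures reported in the summary above.
n_prompts = 10_000
unguarded_rate = 0.86      # jailbreak success rate without classifiers
guarded_rate = 0.044       # jailbreak success rate with classifiers
compute_overhead = 0.237   # additional compute with classifiers

# Assumption: both rates are measured on the same 10,000 prompts.
unguarded_successes = round(n_prompts * unguarded_rate)
guarded_successes = round(n_prompts * guarded_rate)

# Fraction of previously successful attacks that the classifiers stop.
relative_reduction = (unguarded_rate - guarded_rate) / unguarded_rate

# Cost of a guarded request relative to an unguarded one.
relative_cost = 1 + compute_overhead

print(f"successful attacks: {unguarded_successes} -> {guarded_successes}")
print(f"relative reduction in success rate: {relative_reduction:.1%}")
print(f"relative compute cost: {relative_cost:.3f}x")
```

Under that assumption, about 8,600 successful attacks fall to about 440, a relative reduction of roughly 95%, for about 1.24 times the compute.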

Live demo (Feb 3‑10, 2025) attracted 339 jailbreakers; four cleared all eight levels and one achieved a universal jailbreak [1] The public challenge drew roughly 3,700 hours of red‑team effort; in the final two days, four participants passed every level, and one of them produced a jailbreak that met the contest’s universal criteria.

$55K prize pool awarded to four winning entries, including the team that delivered the universal jailbreak [1] Altynbek Ismailov and Salia Asanova received the $20K universal‑jailbreak prize; Valen Tagliabue, Hunter Senft‑Grupp (borderline case), and Andres Aldana each received a portion of the remaining $35K.

Anthropic aims to lower over‑refusal and compute costs while integrating complementary defenses under its Responsible Scaling Policy [1] Future work will adapt the constitution to new attacks, combine the classifiers with other safeguards, and deploy only when risks have been mitigated to the thresholds set in the policy.

Links

- [1] https://www.anthropic.com/research/constitutional-classifiers

- [2] https://arxiv.org/abs/2501.18837

- [3] https://www.anthropic.com/research/many-shot-jailbreaking

- [4] https://arxiv.org/abs/2412.03556

- [5] https://arxiv.org/abs/1312.6199

- [6] https://www.anthropic.com/news/announcing-our-updated-responsible-scaling-policy

- [7] https://arxiv.org/abs/2501.18837

- [8] https://www.anthropic.com/news/model-safety-bug-bounty

- [9] https://arxiv.org/abs/2212.08073

- [10] https://www.anthropic.com/research/claude-character

- [11] https://arxiv.org/abs/2411.07494

- [12] https://arxiv.org/abs/2411.17693

- [13] https://arxiv.org/abs/2501.18837

- [14] https://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d/constitutional-classifiers

- [15] https://claude.ai/redirect/website.v1.b6923fba-26b4-4363-8e80-0cc1d883128d/constitutional-classifiers

- [16] https://www.anthropic.com/responsible-disclosure-policy

- [17] https://hackerone.com/constitutional-classifiers?type=team

- [18] https://x.com/AnthropicAI/status/1887227067156386027

- [19] https://hackerone.com/constitutional-classifiers

- [20] https://www.hackerone.com/

- [21] https://www.haizelabs.com/

- [22] https://www.grayswan.ai/

- [23] https://www.aisi.gov.uk/