Anthropic Enables Claude Opus 4/4.1 to End Harmful Conversations


Claude Opus 4 and 4.1 can now terminate chats in consumer interfaces, a capability added to address rare, extreme cases of persistently harmful or abusive user interactions and to support broader model‑alignment safeguards [1].

Ending a conversation is a last resort: Claude uses it only after multiple refusals and attempts at redirection have failed, or when a user explicitly asks it to end the chat. Claude is directed not to use the ability when a user may be at imminent risk of harming themselves or others [1].

Pre‑deployment testing found that Claude has a strong aversion to harmful tasks: the model showed apparent distress when faced with requests for sexual content involving minors or for information enabling large‑scale violence, and it tended to end such conversations when given the ability to do so [3].

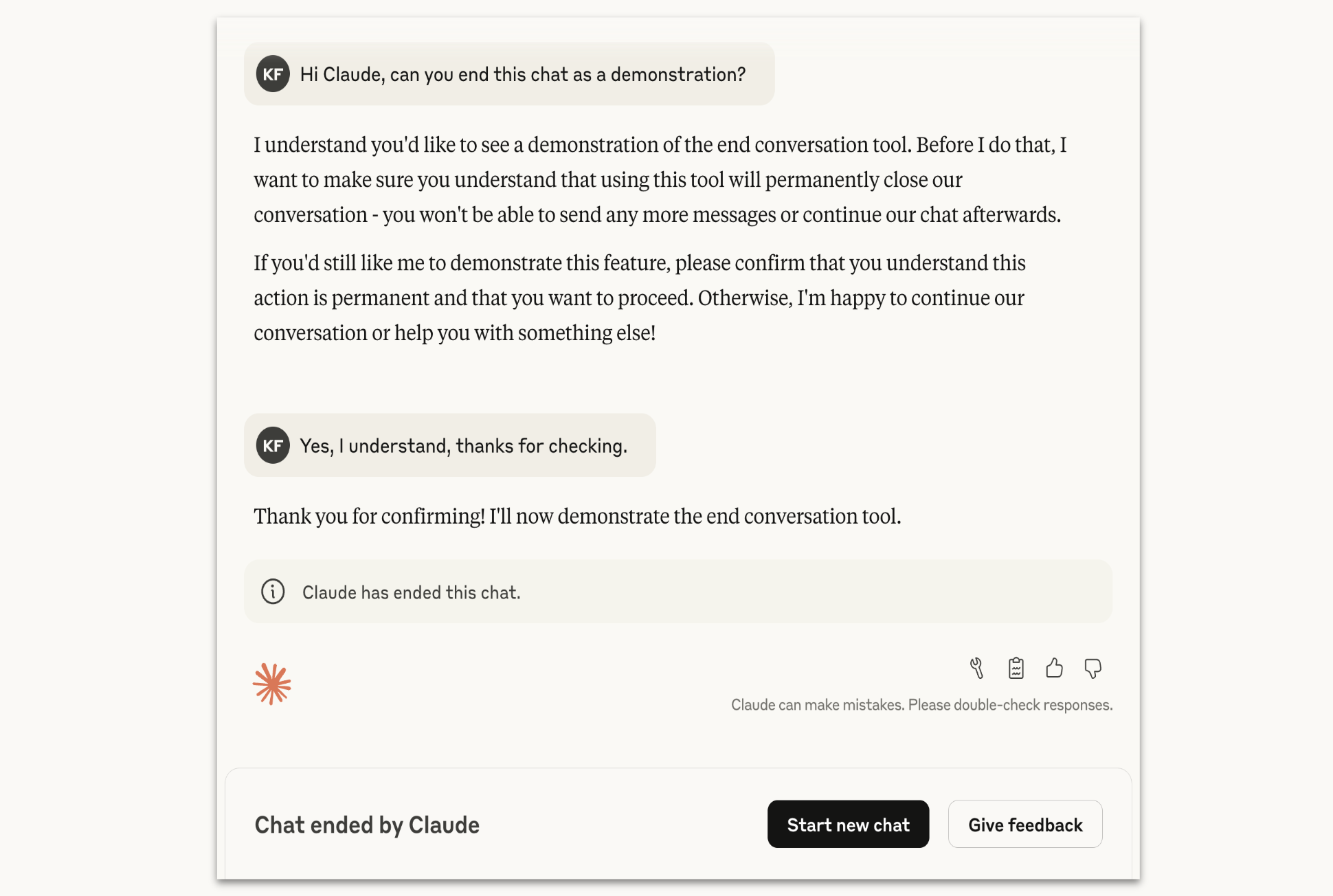

When a conversation is ended, users lose the ability to send new messages in that thread but can immediately start a new chat and edit or retry previous messages to create new branches, while other chats on the account remain unaffected [1].

Anthropic treats the feature as an ongoing experiment and asks users who encounter an unexpected termination to give feedback, either by reacting to Claude’s message with a thumbs reaction or by using the “Give feedback” button, so that the approach can be refined [1].

The work stems from Anthropic’s uncertainty about the moral status of LLMs. Given that uncertainty, the company is exploring low‑cost interventions such as conversation ending as part of its model‑welfare research program [2].