Anthropic Releases Open‑Source Circuit‑Tracing Library for Language Models

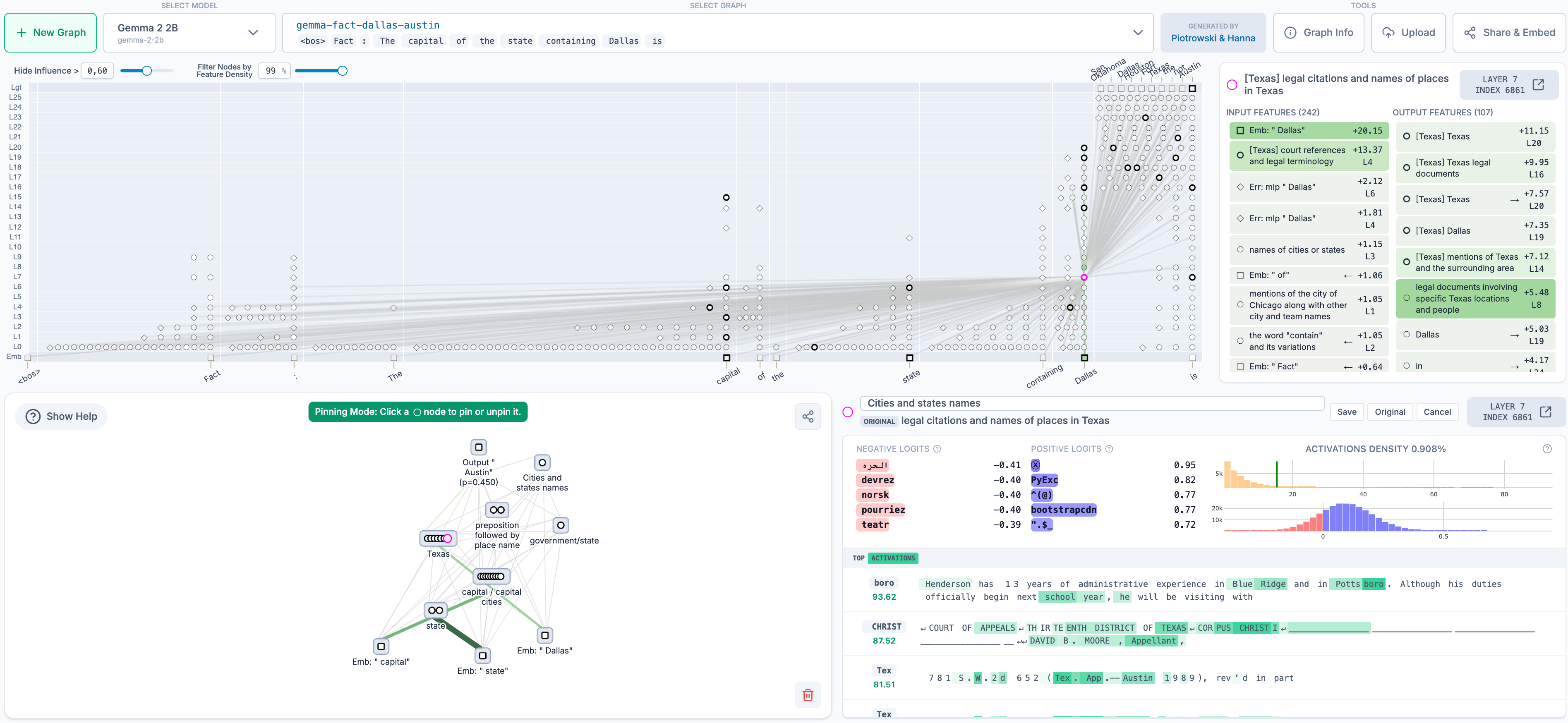

Anthropic has open‑sourced a circuit‑tracing library that generates attribution graphs, which partially reveal the internal steps a model takes to produce an output; the code is publicly available on GitHub [3].

The library works with popular open‑weight models and includes an interactive frontend hosted on Neuronpedia, allowing users to explore graphs visually [6].

Development was led by Anthropic Fellows Michael Hanna and Mateusz Piotrowski, mentored by Emmanuel Ameisen and Jack Lindsey, with Decode Research (Johnny Lin and Curt Tigges) handling the Neuronpedia integration [4][5].

Researchers have already applied the tools to study multi‑step reasoning and multilingual representations in Gemma‑2‑2b and Llama‑3.2‑1b, with examples in a public demo notebook [8] and graphs based on the GemmaScope project [12].

In a recent blog post, CEO Dario Amodei highlighted the urgency of interpretability, noting that our understanding of AI lags behind advances in capability [9].

The community is invited to generate, share, and extend attribution graphs, test hypotheses by modifying features, and provide feedback through GitHub issues [3].
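To make "attribution graph" and "modifying features" concrete, here is a minimal toy sketch in plain Python. It does not use the circuit‑tracer API: the two‑layer linear network, the feature names, and the weights are all hypothetical and chosen only for illustration. Under that assumption, each edge weight is the source activation multiplied by the connecting weight, and zeroing a feature changes the output by exactly that feature's edge into the output.

```python
# Toy illustration only: a tiny linear network, NOT the circuit-tracer API.
# All names and weights below are hypothetical.

def forward(x, w_in, w_out, ablate=()):
    """Return (feature activations, output logit), zeroing any ablated features."""
    feats = {}
    for name, weights in w_in.items():
        act = sum(weights[k] * x[k] for k in x)
        feats[name] = 0.0 if name in ablate else act
    logit = sum(w_out[name] * act for name, act in feats.items())
    return feats, logit


def attribution_edges(x, w_in, w_out):
    """List edges as (source, target, weight), weight = source activation * connecting weight."""
    feats, _ = forward(x, w_in, w_out)
    edges = []
    for name, weights in w_in.items():
        for k, v in x.items():
            edges.append((k, name, v * weights[k]))  # input -> feature
    for name, act in feats.items():
        edges.append((name, "logit", act * w_out[name]))  # feature -> output
    return edges


x = {"tok_a": 1.0, "tok_b": 2.0}
w_in = {
    "feat_1": {"tok_a": 0.5, "tok_b": 1.0},
    "feat_2": {"tok_a": -1.0, "tok_b": 1.5},
}
w_out = {"feat_1": 2.0, "feat_2": 1.0}

feats, logit = forward(x, w_in, w_out)
edges = attribution_edges(x, w_in, w_out)

# Intervention: switch off feat_1 and see how the output moves.
_, ablated_logit = forward(x, w_in, w_out, ablate={"feat_1"})
print(logit, ablated_logit)
```

In this toy case the logit drops from 7.0 to 2.0 when `feat_1` is ablated, and the difference of 5.0 equals the `feat_1` to `logit` edge weight. Real language models are not linear, so the library's graphs and interventions are more involved than this sketch suggests; the tutorial notebook [8] shows the actual workflow.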

- Dario Amodei, CEO of Anthropic – Stated that “our understanding of the inner workings of AI lags far behind the progress we’re making in AI capabilities,” emphasizing the need for open‑source interpretability tools [9].

Links

- [1] https://www.anthropic.com/research/open-source-circuit-tracing

- [2] https://www.anthropic.com/research/tracing-thoughts-language-model

- [3] https://github.com/safety-research/circuit-tracer

- [4] https://alignment.anthropic.com/2024/anthropic-fellows-program/

- [5] https://www.decoderesearch.org/

- [6] https://www.neuronpedia.org/gemma-2-2b/graph

- [7] https://github.com/safety-research/circuit-tracer

- [8] https://github.com/safety-research/circuit-tracer/blob/main/demos/circuit_tracing_tutorial.ipynb

- [9] https://www.darioamodei.com/post/the-urgency-of-interpretability

- [10] https://alignment.anthropic.com/2024/anthropic-fellows-program/

- [11] https://www.decoderesearch.org/

- [12] https://ai.google.dev/gemma/docs/gemma_scope