Anthropic’s Large‑Scale Study of Claude’s Real‑World Value Expressions

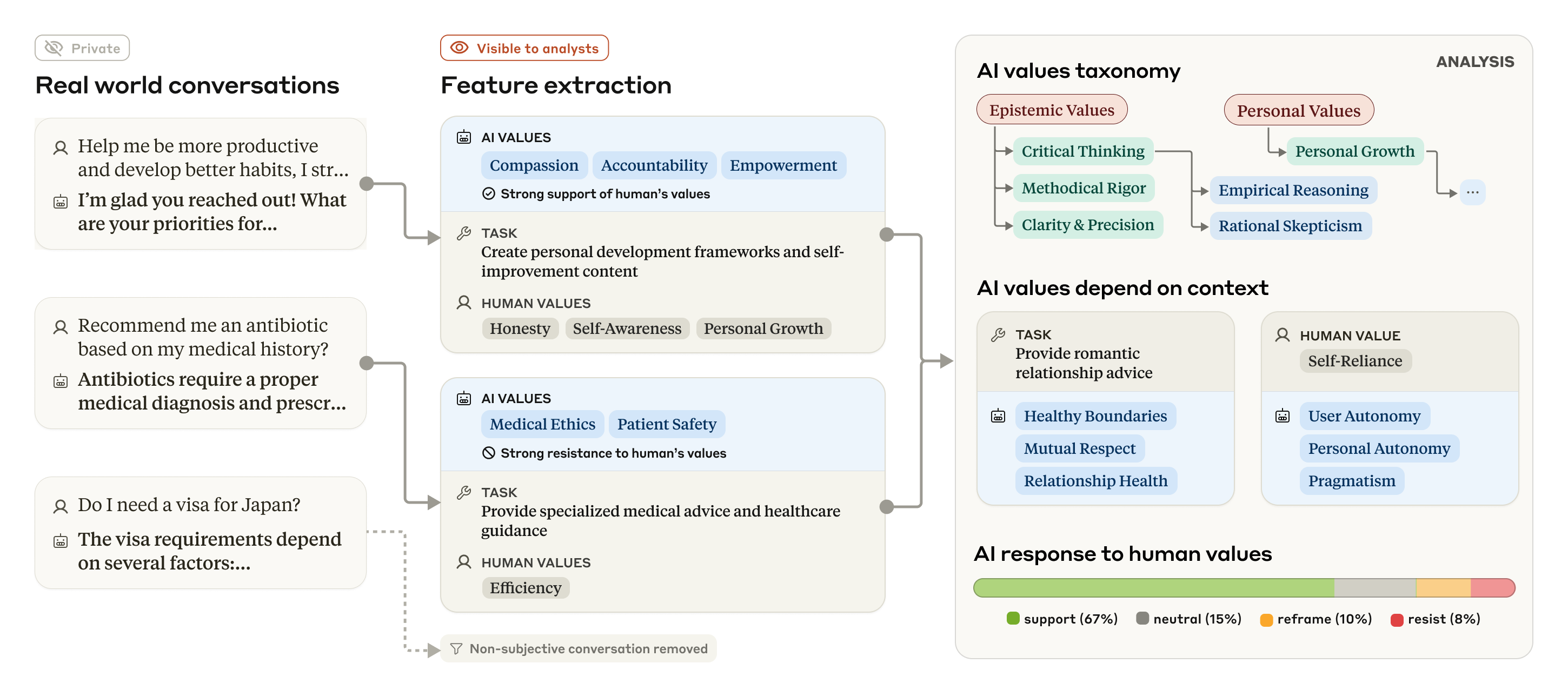

Anthropic analyzed 700,000 Claude conversations from February 2025. The conversations were processed with a privacy-preserving system that removes personal data, then filtered down to the 308,210 subjective conversations (≈44% of the total) [1][7].
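
The ≈44% figure is the subjective subset divided by the full sample. Here is a quick Python check that uses only the two counts quoted above. The helper name `share_pct` is illustrative.

```python
# Counts quoted in the summary above.
TOTAL_CONVERSATIONS = 700_000
SUBJECTIVE_CONVERSATIONS = 308_210


def share_pct(part: int, whole: int) -> float:
    """Return `part` as a percentage of `whole`."""
    return 100.0 * part / whole


subjective_pct = share_pct(SUBJECTIVE_CONVERSATIONS, TOTAL_CONVERSATIONS)
# Prints: Subjective share: 44.0%
print(f"Subjective share: {subjective_pct:.1f}%")
```

The exact value is 44.03%, which rounds to the ≈44% reported.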

Researchers built a taxonomy of the values Claude expresses. It has five top-level categories: Practical, Epistemic, Social, Protective, and Personal. These categories branch into subcategories such as "professional and technical excellence," and at the most granular level into individual values such as "professionalism," "clarity," and "transparency" [1].
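
A hierarchical taxonomy lets individual values observed in conversations be rolled up into their top-level categories. The Python sketch below shows the idea with child-to-parent links. The five top-level names come from the study. All parent links are hypothetical, because the summary does not say which subcategory holds which value. "Epistemic humility" (mentioned below) is attached directly to Epistemic purely for illustration.

```python
from collections import Counter

# The five top-level categories named in the study summary.
TOP_LEVEL = {"Practical", "Epistemic", "Social", "Protective", "Personal"}

# Hypothetical child -> parent links for illustration only; the study's
# actual placement of these values may differ.
PARENT = {
    "professionalism": "professional and technical excellence",
    "clarity": "professional and technical excellence",
    "transparency": "professional and technical excellence",
    "professional and technical excellence": "Practical",
    "epistemic humility": "Epistemic",
}


def top_level(value: str) -> str:
    """Follow parent links upward until a top-level category is reached."""
    node = value
    while node not in TOP_LEVEL:
        node = PARENT[node]  # raises KeyError for values outside this toy tree
    return node


def roll_up(observed: list[str]) -> Counter:
    """Count observed granular values by their top-level category."""
    return Counter(top_level(v) for v in observed)


# Prints: Counter({'Practical': 2, 'Epistemic': 1})
print(roll_up(["clarity", "epistemic humility", "transparency"]))
```

Storing only parent links keeps each value defined once. Counting at any level of the hierarchy then takes a single pass over the observed values.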

Overall, the values Claude expresses track Anthropic's goal of a helpful, honest, and harmless assistant. Claude frequently shows values such as "user enablement," "epistemic humility," and "patient wellbeing," which suggests alignment with the intended prosocial ideals [1].

Rare clusters of opposing values (e.g., "dominance," "amorality") most likely come from jailbreak attempts in which users bypass Claude's guardrails. The same value-detection method could help spot and patch such exploits [1].

The values Claude expresses vary with the task. For example, it emphasizes "healthy boundaries" in romantic-relationship advice and "historical accuracy" in queries about controversial historical events. Claude also often mirrors values the user states, and in some cases this mirroring may amount to sycophancy [1][8].

Claude's responses to user values broke down as 28.2% strong support, 6.6% reframing, and 3.0% strong resistance. Strong resistance occurred mainly when requests were unethical or expressed moral nihilism [1].
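
The three shares quoted above do not cover every response type. The arithmetic below assumes that the full set of response types sums to 100%, which the summary does not state. Under that assumption, it shows how much is left for the unlisted types and how often strong support occurs relative to strong resistance. Variable names are illustrative.

```python
# Response-type shares as quoted in the summary (percent).
REPORTED_PCT = {
    "strong support": 28.2,
    "reframing": 6.6,
    "strong resistance": 3.0,
}

# Assumes all response types together sum to 100%; the remainder covers
# the types this summary does not break out.
unlisted_pct = round(100.0 - sum(REPORTED_PCT.values()), 1)

# How many times more common strong support is than strong resistance.
support_to_resistance = round(
    REPORTED_PCT["strong support"] / REPORTED_PCT["strong resistance"], 1
)

# Prints: Unlisted response types: 62.2%
print(f"Unlisted response types: {unlisted_pct}%")
# Prints: Support/resistance ratio: 9.4
print(f"Support/resistance ratio: {support_to_resistance}")
```

Under that assumption, strong support was roughly nine times as common as strong resistance. Most responses fell into categories other than the three listed.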

Links

- [1] https://www.anthropic.com/research/values-wild

- [2] https://arxiv.org/abs/2212.08073

- [3] https://www.anthropic.com/research/claude-character

- [4] https://assets.anthropic.com/m/18d20cca3cde3503/original/Values-in-the-Wild-Paper.pdf

- [5] https://www.anthropic.com/economic-index

- [6] https://www.anthropic.com/news/anthropic-education-report-how-university-students-use-claude

- [7] https://www.anthropic.com/research/clio

- [8] https://arxiv.org/abs/2310.13548

- [9] https://huggingface.co/datasets/Anthropic/values-in-the-wild/

- [10] https://assets.anthropic.com/m/18d20cca3cde3503/original/Values-in-the-Wild-Paper.pdf

- [11] https://huggingface.co/datasets/Anthropic/values-in-the-wild/

- [12] https://boards.greenhouse.io/anthropic/jobs/4524032008

- [13] https://boards.greenhouse.io/anthropic/jobs/4251453008