Claude Code Agents Double Session Length and Boost Auto‑Approve as Users Gain Trust


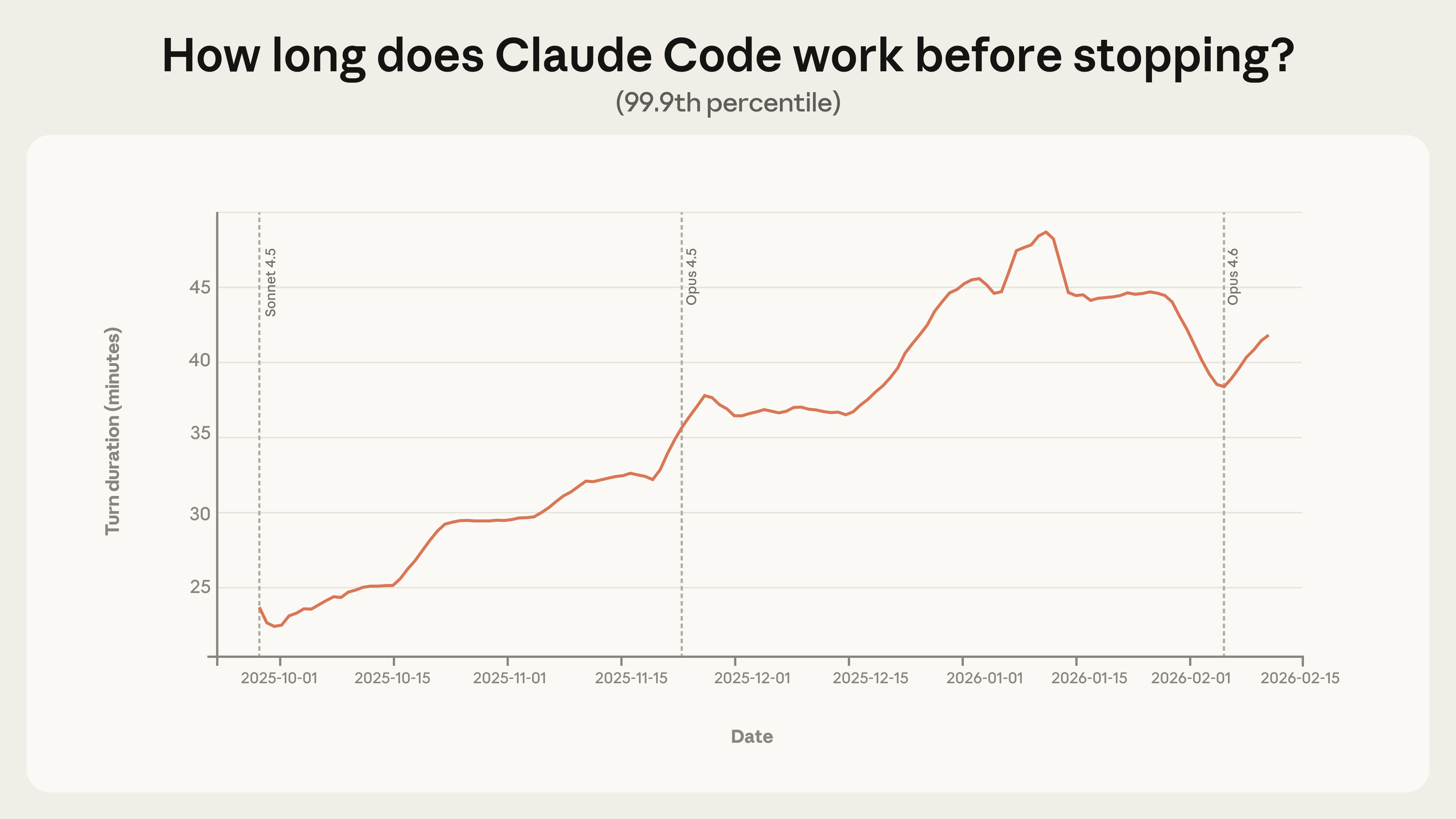

Session Length and Autonomy Grow Rapidly

Claude Code’s 99.9th‑percentile turn duration rose from under 25 minutes in late September 2025 to more than 45 minutes by early January 2026, nearly doubling session length as users granted the agent greater autonomy[1]. The smooth increase spanned multiple model releases, indicating the change reflects user behavior rather than solely technical upgrades[1].
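As a rough illustration of what a 99.9th‑percentile figure measures, the sketch below computes one with Python's standard `statistics.quantiles`. The function name `p999` and the sample data are hypothetical, and the interpolation method is an assumption; the source does not say how Anthropic computed its percentile.

```python
import statistics


def p999(durations_min):
    """Return the 99.9th-percentile value of a list of turn durations.

    statistics.quantiles with n=1000 returns 999 cut points; the last
    one separates the longest 0.1 % of turns from the rest.
    The "inclusive" method is an assumption made for this sketch.
    """
    cuts = statistics.quantiles(durations_min, n=1000, method="inclusive")
    return cuts[-1]


# Hypothetical data: 1,001 turns lasting 0, 1, 2, ..., 1000 minutes.
sample = list(range(1001))
print(p999(sample))
```

Because the metric looks only at the extreme tail, it tracks the longest turns users allow rather than the typical turn.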

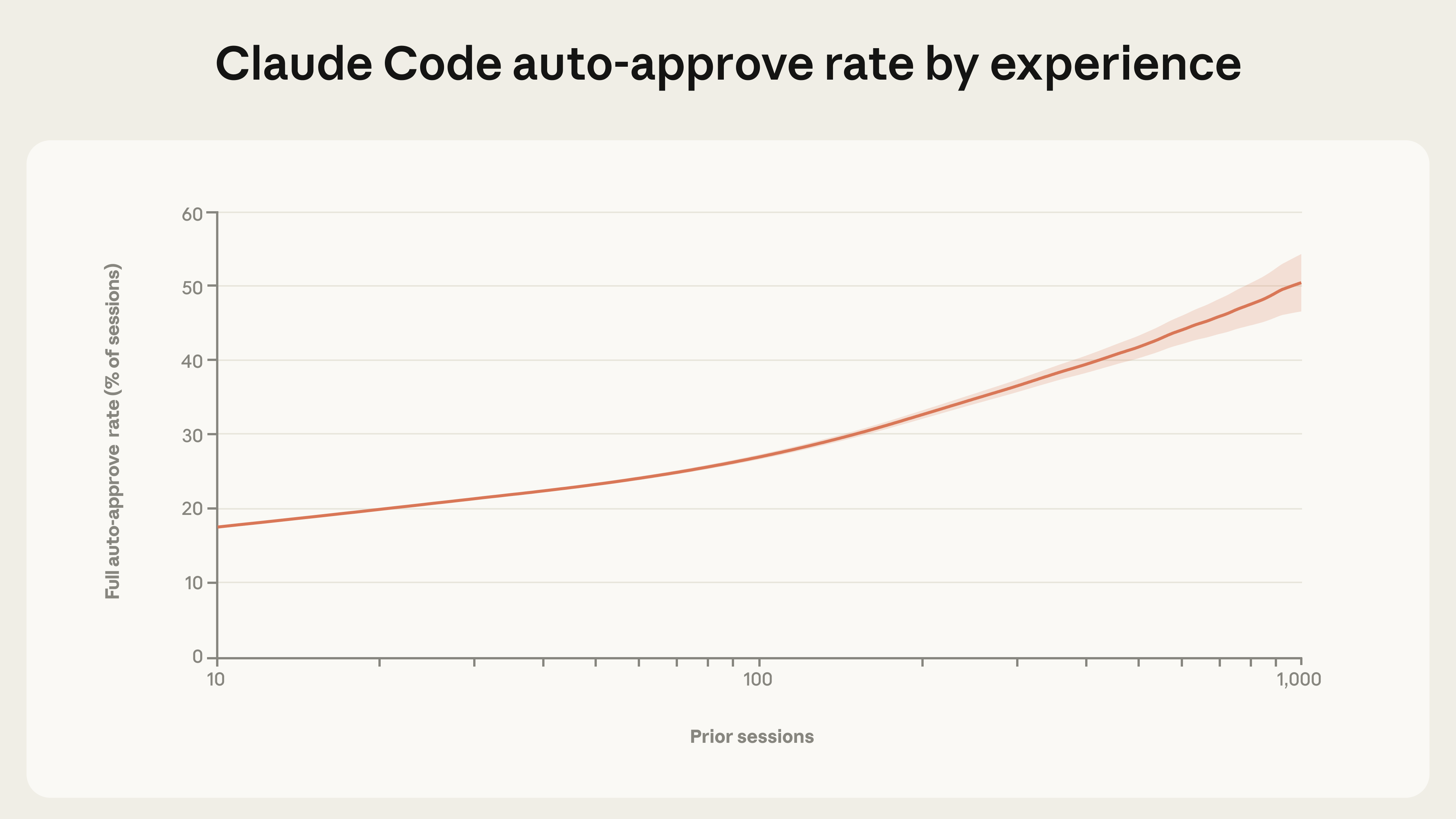

Auto‑Approve Usage Increases With User Experience

New users with fewer than 50 sessions employ full auto‑approve in roughly 20 % of sessions, while veterans with 750 + sessions exceed a 40 % auto‑approve rate, showing rising trust in the agent’s decisions[1]. This pattern suggests that prolonged interaction encourages reliance on Claude Code’s autonomous actions[1].

Interruptions Shift From Approval to Monitoring

Turn‑level interruptions climb from about 5 % for novices (≈10 sessions) to around 9 % for seasoned users, reflecting a transition toward monitoring rather than approving each action[1]. On the most complex goals, Claude Code initiates clarification pauses more than twice as often as humans interrupt, demonstrating self‑regulation of uncertainty[1].

Low‑Risk Engineering Focus and Improved Success Rates

Nearly half of public‑API tool calls involve software‑engineering tasks, with 80 % of calls protected by safeguards, 73 % retaining a human in the loop, and only 0.8 % being irreversible, indicating predominantly low‑risk deployments[1]. Internal users saw the hardest‑task success rate double between August and December 2025 while average human interventions fell from 5.4 to 3.3 per session, highlighting the benefits of increased autonomy[1].

Timeline

Apr 28 2025 – Anthropic’s Clio analysis of 500,000 Claude Code conversations shows the specialist coding agent automates 79 % of chats, far higher than the 49 % automation rate of the general‑purpose Claude chatbot, indicating a shift toward AI‑driven code execution[6].

Nov 2025 – Anthropic’s fourth Economic Index samples Claude Sonnet 4.5 conversations to add five “economic primitives” (task complexity, skill level, purpose, AI autonomy, success), providing the first systematic metrics of AI impact on work[3].

Late Sep 2025 – The 99.9th‑percentile turn duration for Claude Code sessions sits under 25 minutes, establishing a baseline before a rapid rise in user‑granted autonomy later in the year[1].

Aug – Dec 2025 – Internal Claude Code users double their success rate on the hardest tasks while human interventions per session fall from 5.4 to 3.3, demonstrating that increased autonomy improves outcomes[1].

Dec 2 2025 – A survey of 132 Anthropic engineers reports they now rely on Claude for ≈60 % of daily work, crediting the AI with a 50 % boost in self‑reported productivity and describing themselves as “full‑stack” developers handling front‑end, database, and API work they previously avoided[5].

Dec 2 2025 – Engineers note that “routine questions now go to Claude 80‑90 % of the time,” reducing mentor interactions and raising concerns about mentorship loss and social dynamics[5].

Dec 2 2025 – Internal logs reveal Claude Code tackles more complex, autonomous workflows: average task‑complexity score climbs from 3.2 to 3.8, consecutive tool calls per transcript rise 116 % (9.8 → 21.2), and human turns drop 33 % (6.2 → 4.1)[5].
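The percentage changes quoted in this entry can be checked from the before/after values it gives. The sketch below is only an arithmetic check; the helper name `pct_change` is hypothetical, and the inputs are the rounded figures from the entry, so small differences from the reported percentages are to be expected.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# Before/after figures as quoted in the timeline entry.
reported = {
    "task-complexity score": (3.2, 3.8),
    "consecutive tool calls": (9.8, 21.2),
    "human turns": (6.2, 4.1),
}

for name, (before, after) in reported.items():
    print(f"{name}: {pct_change(before, after):+.1f}%")
```

The tool‑call figure reproduces the reported 116 % rise. The human‑turn figure works out to a drop of roughly a third, slightly above the quoted 33 %, which may reflect rounding in the published inputs.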

Early Jan 2026 – The 99.9th‑percentile turn duration for Claude Code sessions exceeds 45 minutes, nearly doubling the Sep 2025 baseline and signaling that users grant the agent far greater autonomy rather than merely benefiting from model upgrades[1].

Early Jan 2026 – Users with 750 + sessions employ full auto‑approve in over 40 % of sessions, up from roughly 20 % for newcomers, reflecting growing trust in the agent’s decisions[1].

Early Jan 2026 – Turn‑level interruptions rise to ≈9 % for seasoned users (≥750 sessions) versus 5 % for novices, showing a shift toward monitoring rather than approving each action[1].

Early Jan 2026 – On the most complex goals, Claude Code initiates clarification pauses more than twice as often as human‑initiated interruptions, demonstrating the model’s self‑regulation of uncertainty[1].

Early Jan 2026 – Nearly 50 % of public‑API tool calls involve software‑engineering tasks, with 80 % of calls protected by safeguards, 73 % retaining a human in the loop, and only 0.8 % being irreversible, indicating that most deployments remain low‑risk[1].

Jan 15 2026 – Anthropic’s Economic Index reports 9‑12× speedups on high‑skill tasks (12–16 years of schooling) while success rates dip from 78 % to 61 %, showing AI delivers large productivity gains but with modest reliability losses on complex work[4].

Jan 15 2026 – Claude appears in 49 % of jobs (up from 36 % in Jan 2025) and disproportionately covers tasks averaging 14.4 years of education, suggesting AI is removing higher‑skill components and potentially deskilling roles such as technical writers and teachers[3].

Jan 15 2026 – Adjusted productivity forecasts shrink to ≈1.0‑1.2 percentage‑points annually for US labor after accounting for task‑success probabilities, still representing a notable return comparable to late‑1990s growth rates[3].

Jan 15 2026 – The Gini coefficient for per‑capita Claude usage in US states falls to 0.32, and regression models predict state‑level parity within 2‑5 years, a diffusion speed ten times faster than past economically consequential technologies[4].
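For readers unfamiliar with the Gini coefficient, the sketch below computes it with the standard mean‑absolute‑difference definition: 0 means perfectly even usage and values near 1 mean usage concentrated in a few places. The function name `gini` and the inputs are hypothetical; the source does not specify which estimator Anthropic used, so this is an assumption.

```python
def gini(values):
    """Gini coefficient via the mean absolute difference.

    G = sum_i sum_j |x_i - x_j| / (2 * n^2 * mean)
    """
    n = len(values)
    if n == 0:
        raise ValueError("need at least one value")
    mean = sum(values) / n
    if mean == 0:
        raise ValueError("mean must be non-zero")
    total_abs_diff = sum(abs(a - b) for a in values for b in values)
    return total_abs_diff / (2 * n * n * mean)


# Hypothetical per-capita usage figures, not taken from the report.
print(gini([1.0, 1.0, 1.0, 1.0]))  # perfectly even usage
print(gini([0.0, 0.0, 0.0, 1.0]))  # usage concentrated in one unit
```

On this scale, the reported 0.32 sits much closer to even usage than to full concentration.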

Jan 15 2026 – Augmented human‑AI collaborations rise to 52 % of Claude.ai conversations (+5 pp), while fully automated interactions dip to 45 % (‑4 pp), reflecting the impact of new features like file creation and persistent memory[4].

Jan 29 2026 – A randomized trial with 52 junior developers finds AI assistance lowers immediate mastery on a new Python library, with quiz scores 17 percentage points lower (50 % vs 67 %) and a statistically significant effect (Cohen’s d = 0.738, p = 0.01)[2].

Jan 29 2026 – The same trial shows AI‑assisted coders finish about two minutes faster on average, a speed advantage that fails to reach statistical significance, indicating modest productivity gains for novel tasks[2].

Jan 29 2026 – Interaction pattern analysis reveals that high‑scoring patterns (generation‑then‑comprehension, hybrid explanation, conceptual inquiry) achieve ≥65 % quiz scores, whereas low‑scoring patterns (full delegation, progressive reliance, iterative debugging) produce <40 %, underscoring the importance of active questioning when using AI[2].

Feb 18 2026 – Claude Code’s session‑length growth from <25 min (Sep 2025) to >45 min (Jan 2026) confirms that users increasingly grant the agent autonomy, a trend that aligns with rising auto‑approve rates and reduced human interruptions[1].

All related articles (6 articles)

[1] Anthropic: Claude Code agents show growing autonomy and shifting user oversight

[2] Anthropic: AI Coding Assistants Boost Speed but May Hinder Skill Mastery

[3] Anthropic: Anthropic’s Fourth Economic Index Shows AI Boosts Complex Tasks but Raises Deskilling Concerns

[4] Anthropic: Anthropic Economic Index Jan 2026 Shows Faster US Diffusion and New AI Usage Metrics

[5] Anthropic: AI‑driven productivity surge and growing pains at Anthropic

[6] Anthropic: Anthropic Finds AI Coding Agent Drives Automation and Startup Adoption
