AI Coding Assistants Accelerate Tasks Yet Reduce Junior Developers’ Learning Gains


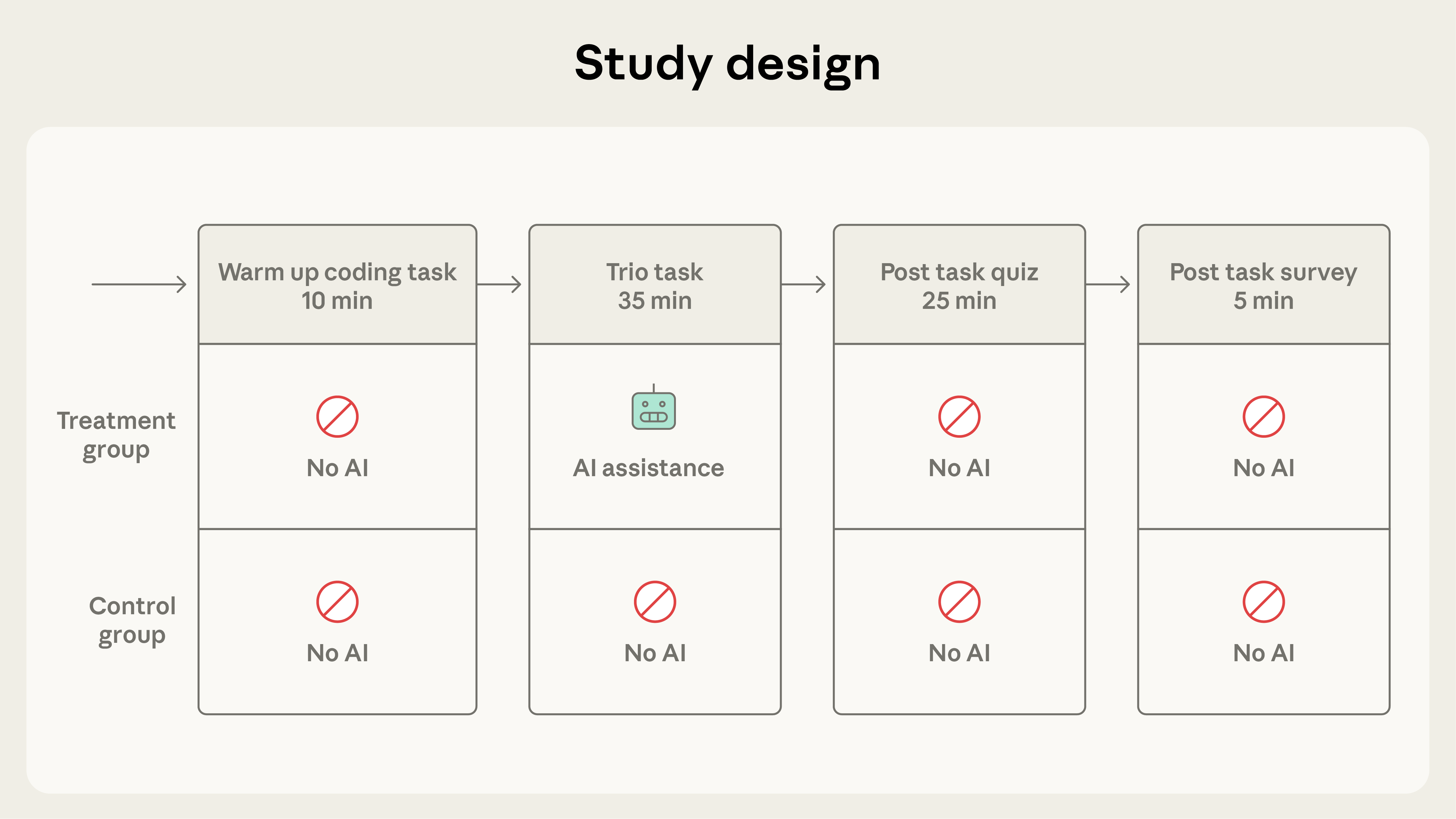

Study Design Compared AI Assistance to Manual Coding

In a study reported on 2026‑01‑29, Anthropic researchers recruited 52 junior developers, each with at least one year of Python experience, and randomly assigned them to an AI‑assisted group or a hand‑coding control group. Both groups implemented two features using the asynchronous Trio library and then took a comprehension quiz [1].

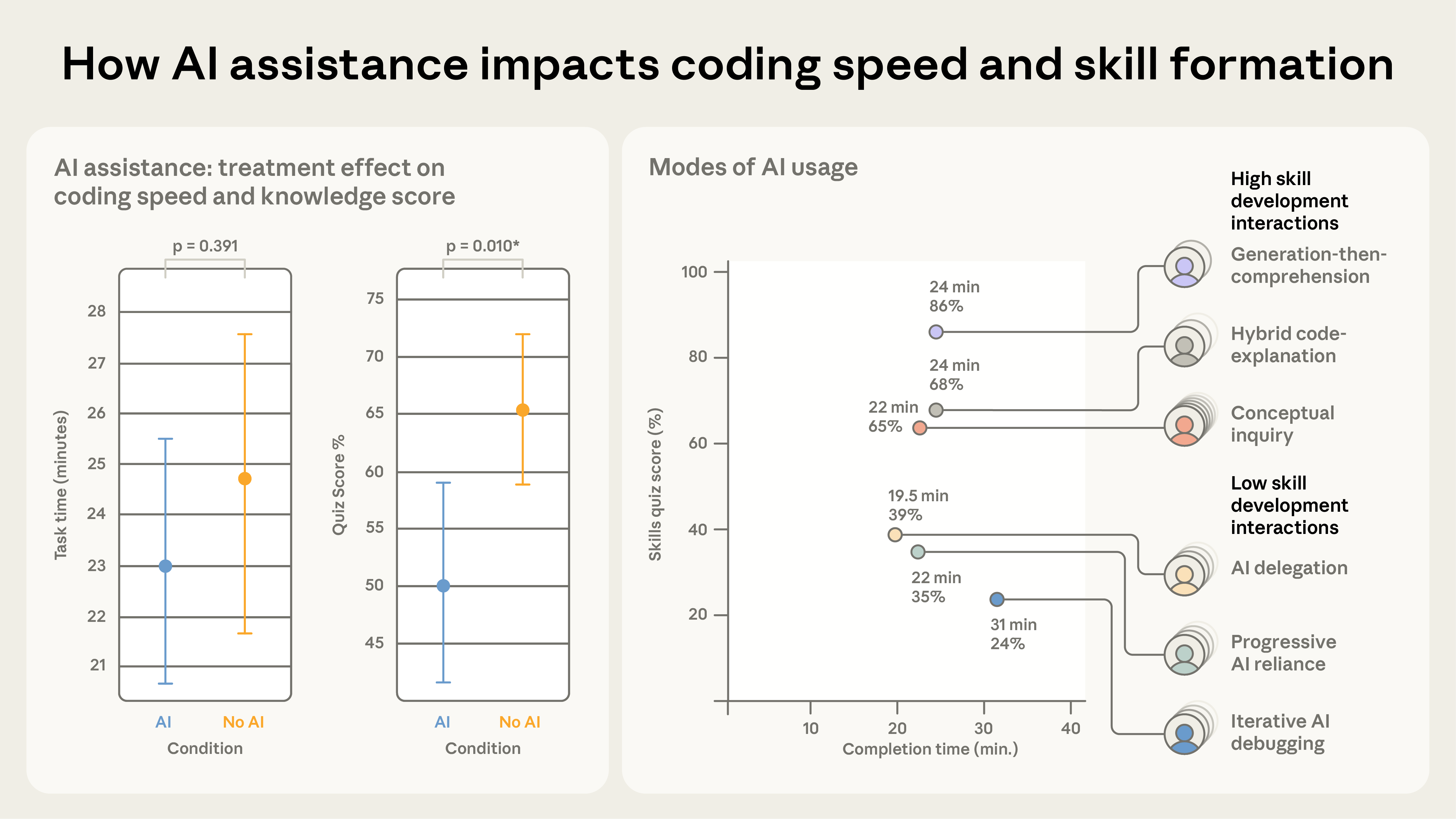

AI Assistance Cut Task Time but Not Significantly

Earlier observations suggested AI could speed up tasks by as much as 80%, yet the trial found only a modest reduction of about two minutes in total task time for the AI group, a difference that did not reach statistical significance [1].

Participants Using AI Scored Lower on Knowledge Quiz

The AI‑assisted cohort averaged 50% correct answers versus 67% for the hand‑coding group, a 17‑percentage‑point gap equivalent to nearly two letter grades (Cohen’s d = 0.738, p = 0.01) [1].
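The quiz gap is easier to read once absolute and relative differences are separated, and because Cohen’s d is defined as the mean difference divided by the pooled standard deviation, the reported d also implies roughly how spread out the scores were. The sketch below uses only the rounded published figures; the implied standard deviation is a back‑of‑the‑envelope derivation of ours, not a number reported by the study, and the helper name `score_gap` is hypothetical.

```python
def score_gap(control_mean: float, treated_mean: float, cohens_d: float) -> dict:
    """Summarize a between-group score difference (hypothetical helper).

    Means are percentages correct. Cohen's d = (mean difference) / (pooled SD),
    so the pooled SD it implies is the mean difference divided by d.
    Inputs are rounded published figures, so results are approximate.
    """
    points = control_mean - treated_mean        # absolute gap, in percentage points
    relative = points / control_mean * 100      # gap relative to the control mean, in %
    implied_pooled_sd = points / cohens_d       # back out the SD from d's definition
    return {
        "points": points,
        "relative_pct": relative,
        "implied_pooled_sd": implied_pooled_sd,
    }


result = score_gap(control_mean=67.0, treated_mean=50.0, cohens_d=0.738)
print(f"{result['points']:.0f} points, {result['relative_pct']:.1f}% lower, "
      f"implied pooled SD ≈ {result['implied_pooled_sd']:.1f} points")
# prints: 17 points, 25.4% lower, implied pooled SD ≈ 23.0 points
```

Read this way, the AI group scored about a quarter lower than the hand‑coding group in relative terms, and the 17‑point difference amounts to roughly three‑quarters of a standard deviation.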

Interaction Patterns Shaped Learning Outcomes and Time Allocation

High‑scoring participants combined code generation with conceptual questions, while low‑scoring participants relied heavily on delegating code to the AI or on iterative AI‑driven debugging. Some participants spent up to 30% of their session time (≈11 minutes) composing as many as 15 queries, which helps explain the limited productivity gain [1].

Timeline

Apr 28, 2025 – Anthropic’s Clio analysis of 500,000 coding interactions classifies 79% of Claude Code conversations as automation, versus 49% on Claude.ai. Feedback‑loop (35.8% vs 21.3%) and directive (43.8% vs 27.5%) interactions are both far more common on Claude Code, indicating that specialist agents shift work from human‑assisted to AI‑performed [5].
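In Anthropic’s Economic Index taxonomy, as we understand it, "automation" groups together the directive and feedback‑loop interaction patterns. Assuming that definition, the two component shares in this entry should roughly add up to the headline automation rates. A quick check with the rounded published shares lands within a point of the reported 79% and 49%; the remaining gaps are presumably rounding.

```python
# Shares (%) of conversations by interaction pattern, as reported in the Apr 28, 2025 entry.
# Assumption: "automation" = directive + feedback loop (Anthropic's taxonomy as we read it).
patterns = {
    "Claude Code": {"directive": 43.8, "feedback_loop": 35.8, "reported_automation": 79.0},
    "Claude.ai": {"directive": 27.5, "feedback_loop": 21.3, "reported_automation": 49.0},
}


def automation_share(shares: dict) -> float:
    """Sum the two automation-type interaction patterns."""
    return shares["directive"] + shares["feedback_loop"]


for product, shares in patterns.items():
    total = automation_share(shares)
    gap = total - shares["reported_automation"]
    print(f"{product}: {total:.1f}% summed vs "
          f"{shares['reported_automation']:.0f}% reported (gap {gap:+.1f})")
```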

Apr 28, 2025 – The same data show developers focus on JavaScript/TypeScript (31%) and HTML/CSS (28%) for front‑end UI work, while Python (14%) and SQL (6%) cover back‑end tasks; startups generate 32.9% of Claude Code sessions versus 23.8% for enterprises, highlighting early‑stage adoption patterns [5].

Dec 2, 2025 – A survey of 132 Anthropic engineers reports Claude now handles 60% of daily work and self‑reported output rises 50%, with staff saying they feel “full‑stack,” tackling front‑end, database, and API tasks they previously avoided [4].

Dec 2, 2025 – Usage logs reveal Claude Code’s average task complexity climbs from 3.2 to 3.8, tool calls per transcript jump 116% (9.8→21.2), and human turns fall 33% (6.2→4.1), showing reduced oversight even on harder problems [4].
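The before/after averages in this entry can be turned back into percentage changes with simple arithmetic. In this minimal sketch using the rounded published values, the tool‑call figure reproduces the reported 116% increase, while the human‑turn figure comes out near 34% rather than 33%, presumably because the underlying averages were rounded before publication. The complexity score rises by roughly 19%.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


# Before/after averages reported in the Dec 2, 2025 usage-log entry.
metrics = {
    "task complexity": (3.2, 3.8),
    "tool calls per transcript": (9.8, 21.2),
    "human turns": (6.2, 4.1),
}

for name, (before, after) in metrics.items():
    print(f"{name}: {before} -> {after} ({pct_change(before, after):+.1f}%)")
```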

Dec 2, 2025 – Engineers estimate that 27% of their Claude‑assisted work would not have been done otherwise, and they now send routine questions to Claude 80–90% of the time, raising concerns that junior staff seek out mentors less often and that mentorship may erode [4].

Dec 2, 2025 – Despite short‑term optimism, a notable share of staff voice anxiety about long‑term career relevance, prompting internal discussions of new career pathways and policy responses [4].

Jan 15, 2026 – Anthropic releases its fourth Economic Index, adding five “economic primitives” (task complexity, human and AI skill levels, purpose, AI autonomy, success) derived from November 2025 Claude conversations to better quantify AI’s work impact [2][3].

Jan 15, 2026 – The Index shows Claude speeds up tasks requiring a high‑school education ninefold and tasks requiring a college education twelvefold, while success rates dip for higher‑skill tasks (66% vs 70%), suggesting that productivity gains concentrate in high‑human‑capital work and raising deskilling concerns [2].

Jan 15, 2026 – Task‑length analysis puts the 50% success threshold at 3.5 hours for Anthropic API data, versus 2 hours on METR benchmarks and up to 19 hours on Claude.ai; the differences reflect users decomposing tasks into smaller steps and selection bias in which tasks they bring to Claude [2].
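A "50% success at 3.5 hours" figure is a time‑horizon estimate. METR’s approach, as we understand it, fits a logistic curve of success probability against the logarithm of task length and reads off the length at which predicted success crosses 50%. The sketch below illustrates that idea with a generic maximum‑likelihood logistic regression fitted by Newton’s method; it is not Anthropic’s or METR’s code, and the task durations and success counts are hypothetical, constructed symmetrically around 3.5 hours so the fitted horizon should land there.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs: list[float], ys: list[int], iters: int = 50) -> tuple[float, float]:
    """Maximum-likelihood fit of P(success | x) = sigmoid(a + b*x) via Newton's method."""
    a, b = 0.0, 0.0
    for _ in range(iters):
        ga = gb = 0.0           # gradient of the log-likelihood
        haa = hab = hbb = 0.0   # negated Hessian (positive definite)
        for x, y in zip(xs, ys):
            p = sigmoid(a + b * x)
            w = p * (1.0 - p)
            ga += y - p
            gb += (y - p) * x
            haa += w
            hab += w * x
            hbb += w * x * x
        det = haa * hbb - hab * hab
        # Newton step: theta <- theta + H^-1 g, where H is the negated Hessian
        a += (hbb * ga - hab * gb) / det
        b += (haa * gb - hab * ga) / det
    return a, b


def time_horizon_hours(durations_h: list[float], outcomes: list[int], level: float = 0.5) -> float:
    """Task length (hours) at which the fitted success probability equals `level`."""
    xs = [math.log2(t) for t in durations_h]   # regress on log2 of task length
    a, b = fit_logistic(xs, outcomes)
    x_star = (math.log(level / (1.0 - level)) - a) / b  # invert the logistic curve
    return 2.0 ** x_star


# Hypothetical data: 10 tasks at each length, success falling as tasks get longer.
durations, outcomes = [], []
for hours, successes in [(0.875, 9), (1.75, 7), (3.5, 5), (7.0, 3), (14.0, 1)]:
    durations += [hours] * 10
    outcomes += [1] * successes + [0] * (10 - successes)

print(f"50% time horizon ≈ {time_horizon_hours(durations, outcomes):.2f} hours")
```

With real data the horizon depends heavily on which tasks are sampled and how they are split up, which is consistent with the API, METR, and Claude.ai figures in this entry differing so widely.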

Jan 15, 2026 – Geographic diffusion data show that per‑capita usage tracks per‑capita GDP. Within the US, the Gini coefficient of usage falls from 0.37 to 0.32, suggesting usage parity could be reached in 2–5 years, roughly ten times faster than major 20th‑century technologies spread [3].
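A Gini coefficient summarizes how unevenly a quantity is spread: 0 means every unit has the same value, and it rises toward 1 as the quantity concentrates in a few units. The minimal sketch below uses the standard unweighted mean‑absolute‑difference formula; the regional usage values are hypothetical, and the report’s exact method (for example, any population weighting) is not specified here.

```python
def gini(values: list[float]) -> float:
    """Unweighted Gini coefficient via the mean absolute difference.

    G = sum_i sum_j |x_i - x_j| / (2 * n^2 * mean(x))
    """
    n = len(values)
    mean = sum(values) / n
    if mean == 0:
        raise ValueError("Gini is undefined when all values are zero")
    total_abs_diff = sum(abs(xi - xj) for xi in values for xj in values)
    return total_abs_diff / (2 * n * n * mean)


# Hypothetical per-capita usage index for five regions.
print(round(gini([1.0, 1.0, 1.0, 1.0, 1.0]), 3))  # perfectly even usage
print(round(gini([0.2, 0.5, 1.0, 1.5, 2.8]), 3))  # more concentrated usage
```

Under this reading, a fall from 0.37 to 0.32 means usage became more evenly spread across the units being compared; it says nothing by itself about whether total usage grew.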

Jan 15, 2026 – Revised productivity estimates lower AI’s contribution to US labor‑productivity growth to about 1.0–1.2 percentage points annually, with elasticity scenarios ranging from 0.7 pp (complements) to 2.6 pp (substitutes) [3].

Jan 29, 2026 – A randomized trial with 52 junior developers learning the Trio library finds that AI‑assisted participants finish the coding task about two minutes faster (a difference that is not statistically significant) but score 17 percentage points lower on a post‑task quiz (50% vs 67%), suggesting that the small speed gain came at a cost to learning [1].

Jan 29, 2026 – High‑scoring AI users combine code generation with conceptual queries, while low‑scoring users rely heavily on delegation and iterative debugging and score below 40% on the quiz, highlighting the importance of interaction style for learning outcomes [1].

Jan 29, 2026 – Screen recordings show AI users devote up to 30% of session time (≈11 minutes) to crafting as many as 15 queries, which helps explain why overall productivity gains are modest and underscores a trade‑off between efficiency and skill development [1].

Jan 29, 2026 – Researchers warn aggressive AI deployment can erode debugging ability and broader code comprehension, urging managers to design tools and policies that preserve intentional learning while leveraging AI’s speed benefits [1].

All related articles (5 articles)

- [1] Anthropic: AI Coding Assistants Boost Speed but May Hinder Skill Mastery
- [2] Anthropic: Anthropic’s Economic Index Shows AI Boosts Complex Tasks but Raises Deskilling Concerns
- [3] Anthropic: New Anthropic Economic Index Report Highlights AI Usage Patterns and Revised Productivity Estimates
- [4] Anthropic: AI‑driven productivity surge and growing pains at Anthropic
- [5] Anthropic: Anthropic Finds AI Coding Agent Drives Automation and Startup Adoption
